How Much Nitrogen Does Corn Get from Fertilizer? Less Than Farmers Think

Corn growers seeking to increase the amount of nitrogen taken up by their crop can adjust many aspects of fertilizer application, but recent studies from the University of Illinois Urbana-Champaign show those tweaks don’t do much to improve uptake efficiency from fertilizer. That’s because, the studies show, corn takes up the majority of its nitrogen — about 67 percent on average — from sources occurring naturally in soil, not from fertilizer.

The evidence for soil as corn’s major nitrogen source emerged repeatedly over the course of four studies, the first published in 2019 and the rest more recently. In all four studies, researchers labeled fertilizers with a naturally occurring isotope of nitrogen, known as 15N, and applied them in the field at different rates, forms, placements and timings.

After each harvest, the researchers analyzed corn biomass and grain for their nitrogen content, attributing labeled 15N to fertilizer and unlabeled nitrogen to soil sources. In all four studies, which included both poor and fertile soils in central Illinois, most of the nitrogen in corn at harvest was unlabeled.
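The attribution step can be illustrated with the textbook isotope-dilution calculation. The sketch below is not taken from the studies. It assumes the common approach of comparing the 15N enrichment of the plant with that of the fertilizer, each measured above natural background (about 0.3663 atom percent). The function names and all sample numbers are hypothetical.

```python
# Standard natural abundance of 15N in unlabeled nitrogen, in atom percent.
NATURAL_ABUNDANCE_ATOM_PCT = 0.3663


def fraction_from_fertilizer(plant_atom_pct, fertilizer_atom_pct,
                             background_atom_pct=NATURAL_ABUNDANCE_ATOM_PCT):
    """Return the fraction of plant N derived from the labeled fertilizer.

    Assumption: the textbook ratio of plant 15N excess to fertilizer 15N
    excess, not necessarily the exact procedure the researchers used.
    """
    fertilizer_excess = fertilizer_atom_pct - background_atom_pct
    if fertilizer_excess <= 0:
        raise ValueError("fertilizer must be enriched above background")
    plant_excess = plant_atom_pct - background_atom_pct
    return plant_excess / fertilizer_excess


def partition_plant_nitrogen(total_plant_n, fertilizer_fraction):
    """Split total plant N into (fertilizer-derived, soil-derived) amounts."""
    from_fertilizer = total_plant_n * fertilizer_fraction
    return from_fertilizer, total_plant_n - from_fertilizer


# Hypothetical sample values, not data from the studies.
fraction = fraction_from_fertilizer(plant_atom_pct=1.3663,
                                    fertilizer_atom_pct=5.3663)
from_fertilizer, from_soil = partition_plant_nitrogen(
    total_plant_n=150.0, fertilizer_fraction=fraction)

print(f"Fertilizer share of plant N: {fraction:.0%}")
print(f"From fertilizer: {from_fertilizer:.1f} lb/acre; "
      f"from soil: {from_soil:.1f} lb/acre")
```

With these made-up inputs, about one-fifth of the plant’s nitrogen traces back to the labeled fertilizer and the remainder is credited to the soil. That is the same kind of split the researchers report.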

“My hope would be that producers would just realize the magnitude of these numbers. They’re purchasing this nitrogen and it’s not all getting into the crop,” said Kelsey Griesheim, who completed the studies as a graduate student and is now an assistant professor at North Dakota State University. “It’s important to make them aware of it, so that when they’re looking at their bottom line and how much they’re spending on nitrogen, they realize the situation.”

Griesheim’s 2019 study found only 21 percent of fertilizer nitrogen made it into the grain when applied in the fall as anhydrous ammonia. The result made some sense, as fall-applied fertilizer lingers in the soil for months before corn is planted, and then has to last throughout the season to nourish the growing crop. Incidentally, the study also found nitrification inhibitors — often applied with anhydrous to slow transformation from ammonia to more-leachable nitrate — didn’t help to enhance nitrogen uptake from fertilizers.

Assuming pre-season and in-season application would achieve greater uptake than fall-applied nitrogen, Griesheim tried those tactics in her three more recent studies.

At planting, Griesheim applied 15N-labeled urea-ammonium-nitrate (UAN) at 80 pounds per acre in subsurface bands using 2 x 3 placement, surface dribble, and drag-chain applications. Reaching up to 46 percent 15N content in corn biomass, banded placement was more efficient than broadcast fertilization, which reached only 34 percent at the best sites.

“No question, banding is more efficient than broadcasting nitrogen. That was very clear from the data,” Griesheim said. “However, whether we applied one band or two bands, or whether we used the 2 x 3 placement or a drag chain, there weren’t a lot of differences in efficiency.”

Griesheim also tested fertilizer placement during in-season growth, or sidedressing, applying 200 pounds per acre of 15N-labeled UAN with a Y-drop attachment that delivers liquid fertilizer at the base of a growing corn stalk. In this case, Griesheim split the application between planting and the V9 growth stage. She compared the Y-drop application against subsurface placement at both growth stages.

“When split between two application times, 15N uptake was higher at sidedressing than at planting, but even when applying in-season, more nitrogen was derived from soil than fertilizer [averaging 26 percent in grain and 31 percent in biomass from fertilizer],” Griesheim said. “We didn’t see a difference between the Y-drop and subsurface applications for five of the six study years, but under conditions conducive to volatilization, uptake was greater with subsurface applications.”

Finally, Griesheim labeled multiple fertilizer forms — UAN, potassium nitrate and liquid urea — with 15N and applied them as surface sidedress applications with a Y-drop applicator. Surprisingly, uptake was greatest when fertilizer was applied as potassium nitrate, followed by UAN, then urea.

“It was interesting that nitrate emerged as the most efficient of the three sources, despite weather conditions that were fairly conducive to nitrogen loss by leaching or denitrification,” said Richard Mulvaney, professor at U. of I. and co-author on all four papers. “Laboratory incubation experiments that were part of the same study showed this was due to ammonia volatilization and immobilization by soil microbes.”

The full body of work suggests there are things farmers can do to increase nitrogen uptake from fertilizers: namely, apply nitrate-based sources in-season while the crop is actively growing. But the recurring lesson that soil supplies the greatest amount of nitrogen to corn is an important one that should lead to changes in nitrogen management, the researchers say.

“If the soil is the main source of nitrogen for crop uptake, which it almost always will be, we need to take the soil into account. It’s just that simple. Otherwise, with factors like timing, rate, placement and form, we’re tweaking, but probably won’t find a miraculous increase in efficiency using those approaches,” Mulvaney said. “We really should go toward adjusting rate according to the soil and the soil-supplying power, going towards variable-rate nitrogen.”

Overapplying nitrogen that doesn’t make it into the crop not only affects farmers’ bottom lines; the excess can leach into waterways or transform into greenhouse gases, adding to agriculture’s environmental footprint.

“Using fertilizer nitrogen uptake efficiency as a means of ranking fertilizer practices makes a lot of sense,” Griesheim said. “More fertilizer in the crop is good for the farmer, but it also means less fertilizer left in the soil, which is good for taxpayers and surrounding ecosystems. It’s a win-win.”

The four studies are published in the Soil Science Society of America Journal.

New Study Reveals Irrigation’s Mixed Effects Around the World

A new study by an international team of researchers shows how irrigation affects regional climates and environments around the world, illuminating how and where the practice is both untenable and beneficial.

The analysis, which appears in the journal Nature Reviews Earth and Environment, also points to ways to improve assessments in order to achieve sustainable water use and food production in the future.

“Even though irrigation covers a small fraction of the earth, it has a significant impact on regional climate and environments, and is either already unsustainable, or verging toward scarcity, in some parts of the world,” explains Sonali Shukla McDermid, an associate professor in NYU’s Department of Environmental Studies and the paper’s lead author. “But because irrigation supplies 40 percent of the world’s food, we need to understand the complexities of its effects so we can reap its benefits while reducing negative consequences.”

Irrigation, which is primarily used for agricultural purposes, accounts for roughly 70 percent of global freshwater extractions from lakes, rivers and other sources and about 90 percent of the world’s consumptive water use. Previous estimates suggest that just under 1.4 million square miles of the earth’s land are currently irrigated. Several regions are the world’s most extensively irrigated and also display some of the strongest irrigation impacts on the climate and environment: the U.S. High Plains states, such as Kansas and Nebraska; California’s Central Valley; the Indo-Gangetic Basin, which spans several South Asian countries; and northeastern China.

While work exists to document some impacts of irrigation on specific localities or regions, it’s been less clear if there are consistent and strong climate and environmental impacts across global irrigated areas — both now and in the future.

To address this, 38 researchers analyzed more than 200 previous studies — an examination that captured both present-day effects and projected future impacts.

Their review pointed to both positive and negative effects of irrigation, including the following:

- Irrigation can cool daytime temperatures substantially and can also change how agroecosystems store and cycle carbon and nitrogen. While this cooling can help combat heat extremes, irrigation water can also humidify the atmosphere and can result in the release of powerful greenhouse gases, such as methane from rice cultivation.

- The practice annually withdraws an estimated 648 cubic miles of water from freshwater sources, which is more than Lake Erie and Lake Ontario hold combined. In many areas, this usage has reduced water supplies, particularly groundwater, and has also contributed to the runoff of agricultural inputs, such as fertilizers, into water supplies.

- Irrigation can also impact precipitation in some areas, depending on the locale, season and prevailing winds.

The researchers also propose ways to improve irrigation modeling, changes that would yield better methods for assessing how to achieve sustainable water use and food production in the future. These largely center on testing models more rigorously and on finding more and better ways to identify and reduce uncertainties associated with both the physical and chemical climate processes and, importantly, human decision-making. The latter could be done with more coordination and communication among scientists, water stakeholders and decision-makers when developing irrigation models.

“Such assessments would allow scientists to more comprehensively investigate interactions between several simultaneously changing conditions, such as regional climate change, biogeochemical cycling, water resource demand, food production and farmer household livelihoods — both now and in the future,” McDermid observed.

Microbes Key to Sequestering Carbon in Soil

Microbes are by far the most important factor in determining how much carbon is stored in the soil, according to a new study with implications for mitigating climate change and improving soil health for agriculture and food production.

The research is the first to measure the relative importance of microbial processes in the soil carbon cycle. The study’s authors found that the role microbes play in storing carbon in the soil is at least four times more important than any other process, including decomposition of biomatter.

That’s important information: Earth’s soils hold three times more carbon than the atmosphere, creating a vital carbon sink in the fight against climate change.

The study, published in Nature, describes a novel approach to better understanding soil carbon dynamics by combining a microbial computer model with data assimilation and machine learning to analyze big data related to the carbon cycle.

The method measured microbial carbon use efficiency, which indicates how much carbon microbes use for growth versus how much they use for metabolism. When used for growth, carbon becomes sequestered by microbes in cells and ultimately in the soil; when used for metabolism, carbon is released into the air as a by-product, carbon dioxide, where it acts as a greenhouse gas. Ultimately, growth of microbes is more important than metabolism in determining how much carbon is stored in the soil.
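As a rough illustration of the quantity involved, carbon use efficiency is commonly expressed as the share of carbon taken up by microbes that goes to growth rather than being respired. The sketch below follows that general definition. It is not the model used in the study, and the function name and sample numbers are hypothetical.

```python
def carbon_use_efficiency(growth_carbon, respired_carbon):
    """Return the fraction of microbial carbon uptake allocated to growth.

    Assumption: uptake is simply growth plus respiration, the common
    textbook definition, with both inputs in the same units.
    """
    if growth_carbon < 0 or respired_carbon < 0:
        raise ValueError("carbon amounts must be non-negative")
    uptake = growth_carbon + respired_carbon
    if uptake == 0:
        raise ValueError("total carbon uptake must be greater than zero")
    return growth_carbon / uptake


# Hypothetical example: of 100 units of carbon taken up,
# 30 go to new microbial biomass and 70 are respired as carbon dioxide.
cue = carbon_use_efficiency(growth_carbon=30.0, respired_carbon=70.0)
print(f"Carbon use efficiency: {cue:.2f}")
```

A higher value means more of the carbon passing through microbes is built into biomass that can end up stored in soil, and less leaves as carbon dioxide.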

“This work reveals that microbial carbon use efficiency is more important than any other factor in determining soil carbon storage,” said Yiqi Luo, the paper’s senior author.

The new insights point agricultural researchers toward studying farm management practices that may influence microbial carbon use efficiency to improve soil health, which also helps ensure greater food security. Future studies may investigate steps to increase overall soil carbon sequestration by microbes. Researchers may also study how different types of microbes and substrates (such as those high in sugars) may influence soil carbon storage.

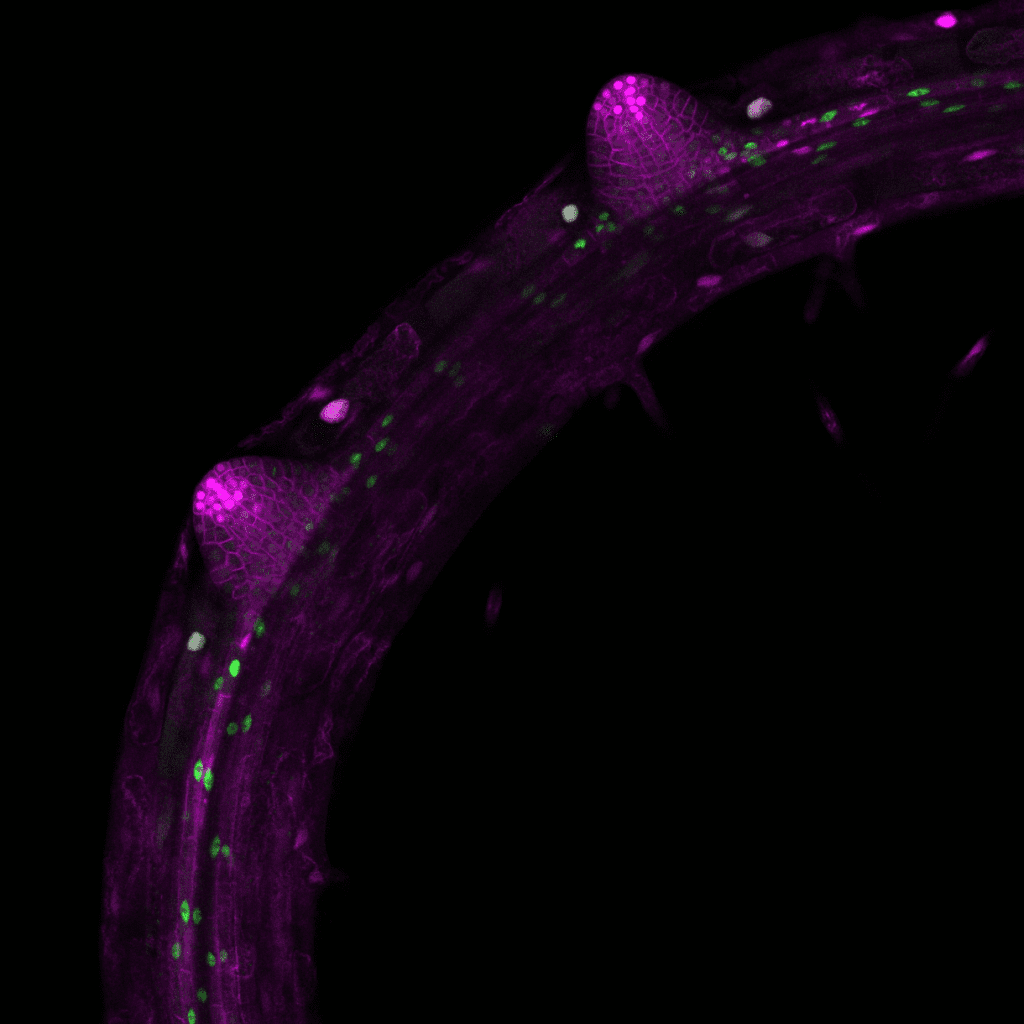

Biologists Identify the Molecular Mechanism That Controls Root Branching

Along with sugar reallocation, a basic molecular mechanism within plants controls the formation of new lateral roots. An international team of plant biologists has demonstrated that it is based on the activity of a certain factor, the target of rapamycin (TOR) protein. A better understanding of the processes that regulate root branching at the molecular level could contribute to improving plant growth and therefore crop yields, according to research team leader Prof. Dr. Alexis Maizel of Heidelberg University.

Good root growth ensures that plants can absorb sufficient nutrients and grow, thus contributing to their general fitness. To do that, plants must align the available resources from metabolic processes with their genetic development programs. Plants bind carbon dioxide (CO2) from the atmosphere in their leaves and convert it to simple sugars via photosynthesis. In the form of fructose and glucose, these simple sugars are also allocated to the roots, where they drive the growth and development of the plant.

Prof. Maizel’s team used the thale cress Arabidopsis thaliana, a model plant in plant research, to study how this process occurs at the molecular level. Their investigations focused on the role glucose plays in forming lateral roots. “We do know that, besides plant hormones, sugar from the shoot is also allocated in the roots; but how the plant recognizes that sugar resources are available for forming lateral roots has not been understood thus far,” explained Dr. Michael Stitz, a researcher on the team.

The studies at the metabolism level showed that Arabidopsis forms lateral roots only when glucose breaks down and carbohydrates are consumed in the pericycle — the outermost cell layer of the main root cylinder. This process is controlled at the molecular level by the target of rapamycin protein. This factor controls critical signal networks and metabolic processes in plants as well as in animals and humans. Its activity is governed by the interaction of growth factors like the plant hormone auxin and nutrients like sugar.

Using Arabidopsis, the researchers discovered that TOR becomes active in the pericycle cells only when sugar is present there. So-called founder cells then form the lateral roots through cell division. “TOR assumes a kind of gatekeeper role; when the plant activates the genetic growth program responsible for root formation via the hormone auxin, TOR checks whether there are sufficient sugar resources available for this process,” Prof. Maizel said.

TOR acts by controlling the translation of specific auxin-dependent genes, blocking their expression if there aren’t sufficient sugar resources available. When the researchers suppressed TOR activity, no lateral roots were formed. “That suggests that a fundamental molecular mechanism is involved,” Prof. Maizel said.

At the same time, the researchers demonstrated that TOR controls, via a similar mechanism, the formation of roots from other plant tissues — the so-called adventitious roots. The results from their investigations could also be of interest for agricultural applications.